Multitask Prediction of Exchange-level Annotations for Multimodal Dialogue Systems

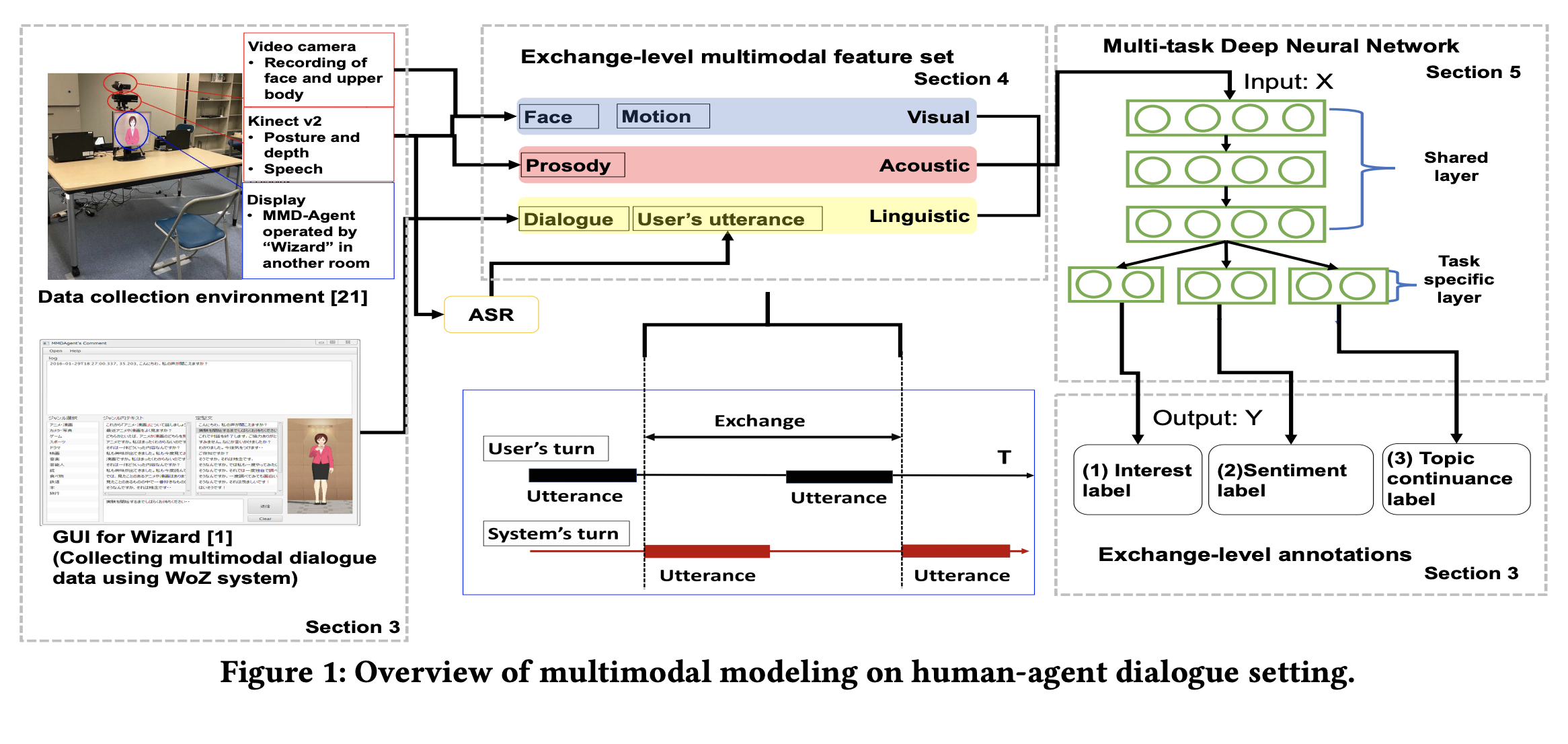

This paper presents multimodal computational models of three labels, each annotated independently per exchange. The goal is to implement a mechanism in spoken dialogue systems that adapts the dialogue strategy by recognizing user sentiment through multimodal signal processing.

The three labels are (1) the user’s interest in the current topic, (2) the user’s sentiment, and (3) topic continuance, which denotes whether the system should continue the current topic or change it. These labels capture different aspects of the user’s sentiment and of the system’s next action, so predicting them helps the system adopt a dialogue strategy based on the user’s sentiment.

For this purpose, we enhanced a shared multimodal dialogue corpus by annotating it with impressed sentiment labels and topic continuance labels. Using this corpus, we developed a multimodal model that predicts the three labels. Because the labels are partially similar to one another, we applied multitask learning to the three binary classification tasks. Thanks to the multitask learning framework, the model was trained efficiently even on a small data set (fewer than 2,000 samples). Experimental results show that the multitask deep neural network (DNN) model trained with multimodal features (linguistic features, facial expressions, body and head motions, and acoustic features) outperformed single-task DNNs trained with the same features by up to 1.6 points.
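The multitask setup described above can be sketched as follows. This is a minimal NumPy sketch under our own assumptions, not the authors' implementation: the modalities are fused by simple concatenation (early fusion), one shared ReLU hidden layer feeds three sigmoid output heads (hard parameter sharing), and the training loss is the unweighted sum of the three per-task binary cross-entropies. All function names (`fuse`, `init_params`, `forward`, `multitask_loss`, `train_step`), the feature dimensions, the layer size, and the synthetic data are hypothetical.

```python
import numpy as np

# Hypothetical task order: one binary output per exchange-level label.
TASKS = ("interest", "sentiment", "topic_continuance")


def fuse(*modalities):
    """Early fusion (an assumption): concatenate per-exchange feature blocks."""
    return np.concatenate(modalities, axis=1)


def init_params(n_in, n_hidden, n_tasks, seed=0):
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 0.1, size=(n_in, n_hidden)),   # shared layer
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 0.1, size=(n_hidden, n_tasks)),  # one column per task head
        "b2": np.zeros(n_tasks),
    }


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def forward(params, x):
    h_pre = x @ params["W1"] + params["b1"]
    h = np.maximum(h_pre, 0.0)                     # shared ReLU representation
    p = sigmoid(h @ params["W2"] + params["b2"])   # independent sigmoid per task
    return h_pre, h, p


def multitask_loss(p, y, eps=1e-7):
    """Sum over tasks of the mean binary cross-entropy (equal task weights)."""
    p = np.clip(p, eps, 1.0 - eps)
    bce = -(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))
    return bce.mean(axis=0).sum()


def train_step(params, x, y, lr=0.1):
    """One full-batch gradient-descent step; all heads update the shared layer."""
    h_pre, h, p = forward(params, x)
    n = x.shape[0]
    d_logits = (p - y) / n                  # d(loss)/d(logits) for sigmoid + BCE
    grads = {"W2": h.T @ d_logits, "b2": d_logits.sum(axis=0)}
    d_h_pre = (d_logits @ params["W2"].T) * (h_pre > 0)  # backprop through ReLU
    grads["W1"] = x.T @ d_h_pre
    grads["b1"] = d_h_pre.sum(axis=0)
    for k in params:
        params[k] -= lr * grads[k]


# Synthetic demo data (hypothetical dimensions, not the paper's feature sets).
rng = np.random.default_rng(1)
n = 200
ling, face = rng.normal(size=(n, 8)), rng.normal(size=(n, 4))
motion, acoustic = rng.normal(size=(n, 4)), rng.normal(size=(n, 4))
x = fuse(ling, face, motion, acoustic)

# The three synthetic labels share a latent factor to mimic partial similarity.
shared = x[:, 0] + x[:, 8]
y = np.stack([shared + x[:, 12] > 0, shared - x[:, 16] > 0, shared > 0],
             axis=1).astype(float)

params = init_params(x.shape[1], 16, len(TASKS))
first = multitask_loss(forward(params, x)[2], y)
for _ in range(500):
    train_step(params, x, y)
_, _, p = forward(params, x)
last = multitask_loss(p, y)
```

In this sketch, gradients from all three heads flow into the shared layer, which is one common way multitask learning exploits related tasks when data are scarce; a single-task baseline would instead train a separate network for each label.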
