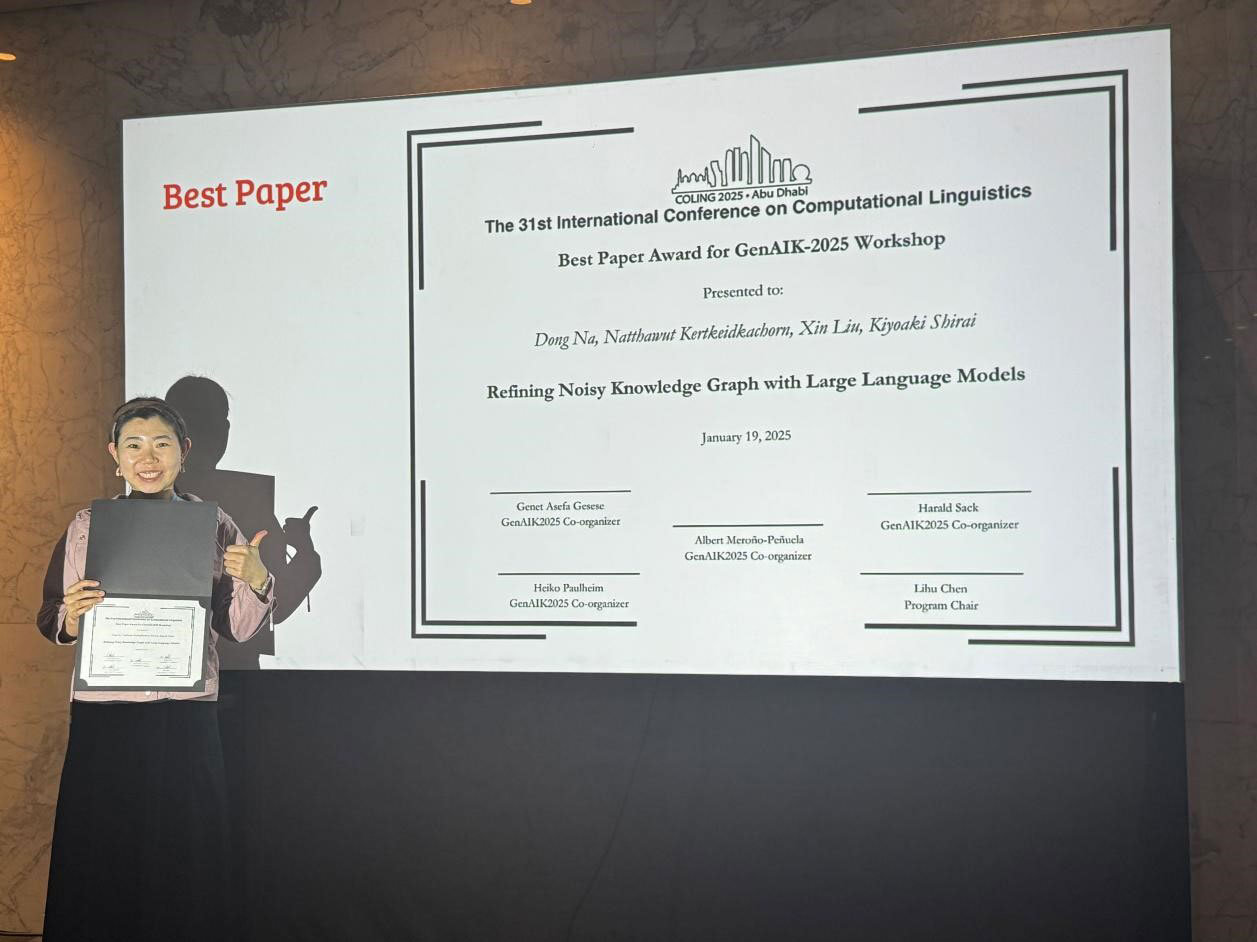

Student DONG Receives Best Paper Award at GenAIK-2025

DONG, Na (second-year doctoral student, Human Information Science Research Area, Shirai Laboratory) received the Best Paper Award at the workshop Generative AI and Knowledge Graphs (GenAIK-2025), held in conjunction with The 31st International Conference on Computational Linguistics (COLING 2025).

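COLING 2025 is a major international conference for sharing and discussing cutting-edge research, technical innovations, and applications in computational linguistics and natural language processing (NLP). It was held in Abu Dhabi, United Arab Emirates, from January 19 to 24, 2025. Researchers, practitioners, educators, and students gathered at the conference to discuss the latest research on what advances in language technology could mean for society and industry.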

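GenAIK-2025 is a workshop held in conjunction with COLING 2025. It aims to strengthen ties among the deep learning, knowledge graph, and NLP communities and to promote interdisciplinary research in the field of GenAI.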

*Reference: COLING 2025

GenAIK-2025

■Date of Award

January 19, 2025

■Research Title / Paper Title

Refining Noisy Knowledge Graph with Large Language Models

■Researchers / Authors

Dong Na, Natthawut Kertkeidkachorn, Xin Liu (AIST), Kiyoaki Shirai

■Summary of the Awarded Research

Knowledge graphs (KGs) represent structured real-world information composed by triplets of head entity, relation, and tail entity. These graphs can be constructed automatically from text or manually curated. However, regardless of the construction method, KGs often suffer from misinformation, incompleteness, and noise, which hinder their reliability and utility. This study addresses the challenge of noisy KGs, where incorrect or misaligned entities and relations degrade graph quality. Leveraging recent advancements in large language models (LLMs) with strong capabilities across diverse tasks, we explore their potential to detect and refine noise in KGs. Specifically, we propose a novel method, LLM_sim, to enhance the detection and refinement of noisy triples. Our results confirm the effectiveness of this approach in elevating KG quality in noisy environments. Additionally, we apply our proposed method to Knowledge Graph Completion (KGC), a downstream KG task that aims to predict missing links and improve graph completeness. Traditional KGC methods assume that KGs are noise-free, which is unrealistic in practical scenarios. Our experiments analyze the impact of varying noise levels on KGC performance, revealing that LLMs can mitigate noise by identifying and refining incorrect entries, thus enhancing KG quality.

■Comment from the Awardee

I feel incredibly fortunate to have had the opportunity to attend COLING 2025. I would like to express my heartfelt gratitude to my advisors, Associate Professor Kiyoaki Shirai and Senior Lecturer Natthawut Kertkeidkachorn, for their unwavering support, which made my participation in this prestigious conference possible. During the conference, I had the chance to meet many outstanding individuals and gain valuable insights into various remarkable research works. These experiences have been deeply inspiring and serve as a significant motivation for my future endeavors. What makes this event even more unforgettable is that our work, "Refining Noisy Knowledge Graph with Large Language Models," was honored with the Best Paper Award for the GenAIK-2025 Workshop. Sharing our research insights and receiving constructive feedback from experts in the field was both exhilarating and inspiring. This recognition motivates me to strive for even higher-quality research in the future.

February 7, 2025