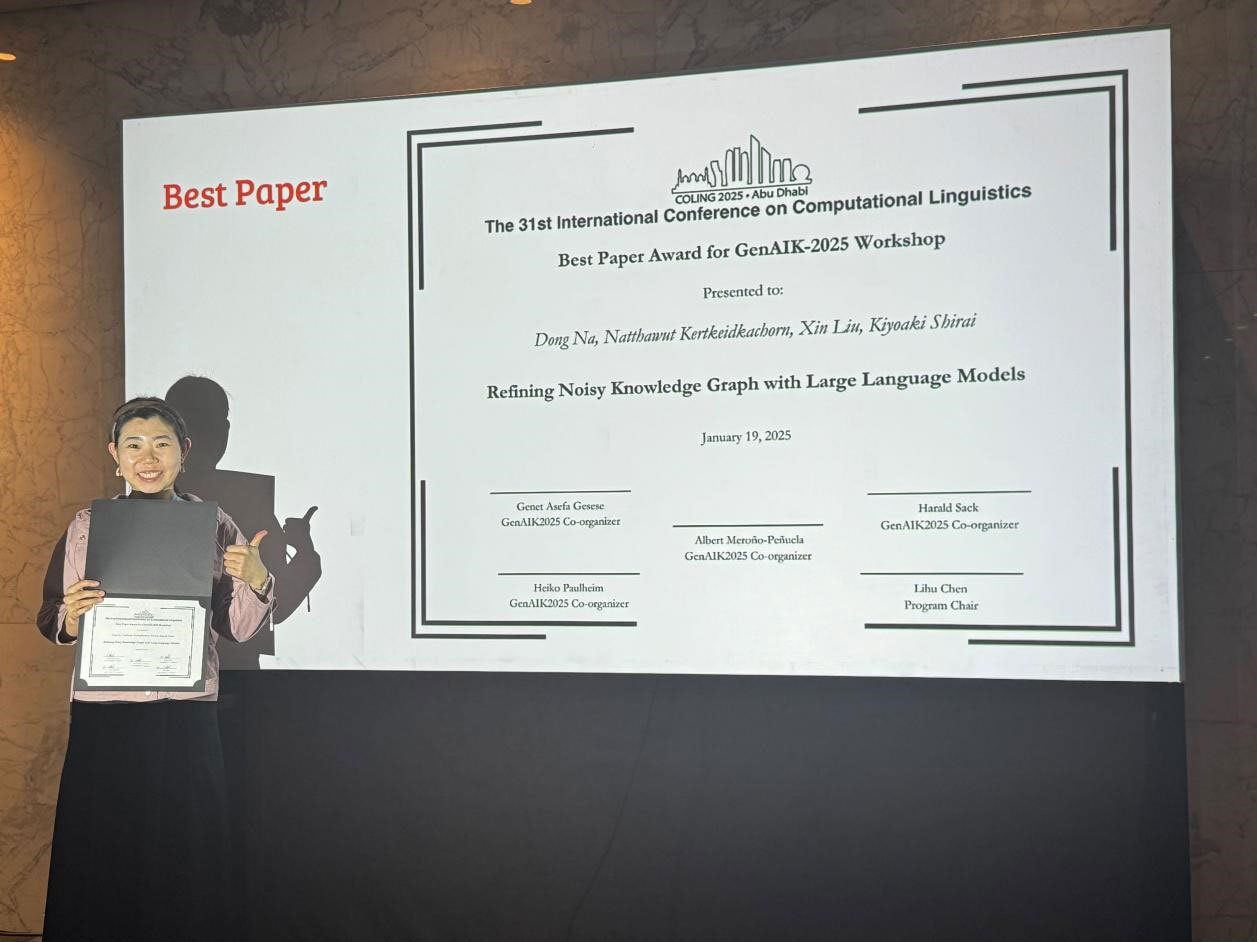

Ms. DONG received the Best Paper Award at the GenAIK-2025 workshop

Ms. DONG, Na (2nd-year doctoral student in Shirai Lab, Human Information Science Research Area) received the Best Paper Award at the Generative AI and Knowledge Graphs (GenAIK-2025) workshop, which was held in conjunction with the 31st International Conference on Computational Linguistics (COLING 2025).

COLING 2025, an international conference on computational linguistics and natural language processing, was held in Abu Dhabi, United Arab Emirates, from January 19 to 24, 2025.

The GenAIK-2025 workshop aims to reinforce the relationships among the Deep Learning, Knowledge Graphs, and NLP communities and to foster interdisciplinary research in the area of GenAI.

■Date Awarded

January 19, 2025

■Title

Refining Noisy Knowledge Graph with Large Language Models

■Authors

Dong Na, Natthawut Kertkeidkachorn, Xin Liu (AIST), Kiyoaki Shirai

■Abstract

Knowledge graphs (KGs) represent structured real-world information composed by triplets of head entity, relation, and tail entity. These graphs can be constructed automatically from text or manually curated. However, regardless of the construction method, KGs often suffer from misinformation, incompleteness, and noise, which hinder their reliability and utility. This study addresses the challenge of noisy KGs, where incorrect or misaligned entities and relations degrade graph quality. Leveraging recent advancements in large language models (LLMs) with strong capabilities across diverse tasks, we explore their potential to detect and refine noise in KGs. Specifically, we propose a novel method, LLM_sim, to enhance the detection and refinement of noisy triples. Our results confirm the effectiveness of this approach in elevating KG quality in noisy environments. Additionally, we apply our proposed method to Knowledge Graph Completion (KGC), a downstream KG task that aims to predict missing links and improve graph completeness. Traditional KGC methods assume that KGs are noise-free, which is unrealistic in practical scenarios. Our experiments analyze the impact of varying noise levels on KGC performance, revealing that LLMs can mitigate noise by identifying and refining incorrect entries, thus enhancing KG quality.

■Comment

I feel incredibly fortunate to have had the opportunity to attend COLING 2025. I would like to express my heartfelt gratitude to my advisors, Associate Professor Kiyoaki Shirai and Senior Lecturer Natthawut Kertkeidkachorn, for their unwavering support, which made my participation in this prestigious conference possible. During the conference, I had the chance to meet many outstanding individuals and gain valuable insights into various remarkable research works. These experiences have been deeply inspiring and serve as a significant motivation for my future endeavors. What makes this event even more unforgettable is that our work, "Refining Noisy Knowledge Graph with Large Language Models," was honored with the Best Paper Award for the GenAIK-2025 Workshop. Sharing our research insights and receiving constructive feedback from experts in the field was both exhilarating and inspiring. This recognition motivates me to strive for even higher-quality research in the future.

February 7, 2025