We study a wide range of topics related to image/video processing and user interfaces.

YOSHITAKA Laboratory

Associate Professor: YOSHITAKA Atsuo

E-mail:

[Research areas]

Image Processing, Affective Information Processing, User Interfaces

[Keywords]

Deep learning, video analysis, object detection and identification, medical image processing, gaze analysis, shooting and editing support systems

Skills and background we are looking for in prospective students

In addition to a basic understanding of image processing, the human visual system, and user interfaces, students are expected to be able to implement image processing programs in Python or other languages. The knowledge and abilities required will vary depending on the research topic.

What you can expect to learn in this laboratory

The laboratory fosters logical thinking skills for recognizing and solving technical and theoretical problems related to object detection, object identification, image generation, and user interface implementation in Python, C++, and other languages. Students learn image processing using machine learning such as deep learning, as well as user interface design and implementation methods that take human behavior and the human visual system into account. In addition, because research requires reading and understanding not only Japanese papers but also papers published in English-language journals and at major international conferences, students can expect to improve their scientific and technical English, including through research discussions with international students. Regardless of the research topic, students will develop the ability to think logically, recognize issues, and solve problems.

Research outline

The amount of image/video information we can access is increasing year by year, and technology for accurately finding the necessary information within this large volume is becoming more important. In addition, it has become part of daily communication for ordinary people, who are not experts in image/video production, to record footage with video cameras and smartphones and share it with many people through social networking services (SNS). To establish an image/video processing system that helps people's intellectual activities and contributes to higher quality, we need a system based on an information processing model that is consistent with the way people view and perceive information.

1. Innovation on Image/Video Accessing Environment

The application of deep learning to image processing has significantly improved object detection and identification performance. We are studying methods to visualize more precisely the basis for decisions when deep learning is applied to image identification. We are also studying methods that generate images with deep learning to improve detection and identification accuracy for objects for which actual training data is difficult to collect.

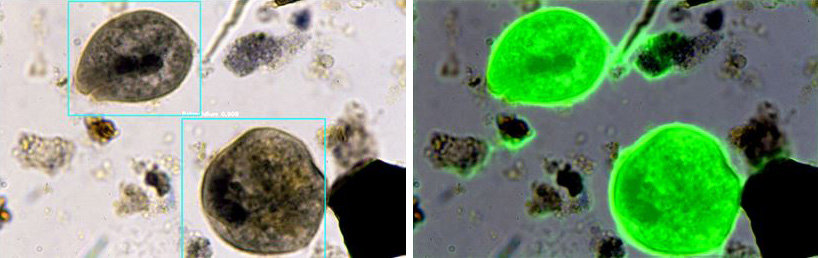

Detecting and identifying parasites with a DNN

2. Innovation on Video Authoring Environment

Video camera that gives cinematography guidance during shooting

Footage shot and edited by users who do not have specialized knowledge or skills in image production may convey affective information that includes nuances different from those intended by the creator, and this can cause discrepancies in communication between the creator and receiver of the image. In order to solve such problems, we are also conducting research on shooting and editing support as a technology that assists in the appropriate expression of affective information.

3. Innovation on HCI by Gaze Analysis

More effective information retrieval and recommendation become possible if a computer system can learn what a person is interested in, and how deeply, while that person views image/video information, without placing an excessive load on the person. We are studying how to extract this information from eye movements and gaze data recorded while a person views image/video information, and how to apply it to information retrieval and recommendation.

Key publications

- Pengcheng Zeng and Atsuo Yoshitaka, "Visual Speech Recognition with Surrounding and Emotional Information", IEEE International Symposium on Multimedia, 8 pp., 2024.

- Genta Matsukawa and Atsuo Yoshitaka, "Data Augmentation with Diffusion Model for Hand Detection", IEEE International Symposium on Multimedia, 4 pp., 2024.

- Han Lam, Khoa Pho, and Atsuo Yoshitaka, "AdVLO: Region selection via Attention-driven for Visual LiDAR Odometry", Proc. 15th Asian Conference on Intelligent Information and Database Systems, 12 pp., 2023.

Equipment

Eye tracking equipment (Nac, Tobii), computational server in the laboratory, high-resolution video recording and editing system, on-campus HPC system

Teaching policy

Research topics are decided with respect for the fields and content that students wish to study. In laboratory seminars, students are encouraged to actively discuss their research themes with one another and to continually refine the content and completeness of their research by examining their research direction and research design from a third person’s perspective. We also encourage students to submit papers to international journals and conferences, and we support them in presenting and discussing their research contributions in English.

[Website] URL: https://www.jaist.ac.jp/is/labs/yoshitaka-lab/index_e.html